If you’re wondering how to edit a live survey, you’ve missed the most critical step in the survey design process. What kind of testing did you do?

- “Testing” = I clicked the link and it opened, so I guess it’s good to go.

- Real Testing = I totally understand the goals of this project, the purpose of each question, the possible answers, the requirements of the audience — and I’ve anticipated and addressed every possible point of participant confusion — and I’ve collected feedback from every project partner and stakeholder and we’re good to go.

Unfortunately, in the run-up to a project launch, “testing” is much too common.

Of course, things happen. Even in our most perfect projects, requirements can change. Mistakes arise. “I thought it would work that way” meets “That’s not how it’s supposed to work at all.”

Also, of course, nobody reaching out for help in these stressful situations wants to get a shrug and a “Better luck next time!” When is it too late to fix the problem — and is it always too late?

Answer 1: It’s always too late

Imagine that you’re conducting a survey and you ask participants to choose their favorite color. Reviewing the results a few days later, you see that blue and green are at the top of the list — but you suddenly realize that you forgot to add red as an option. What to do?

- If you don’t add red, your results may not actually be representative of your participants’ true favorite colors.

- If you do add red, it’s already “behind” in the ratings and can’t possibly be selected as many times as those colors that were always present. This means your report won’t fairly or accurately represent the selections participants might have chosen if all colors were present from the start.

Those reviewing the results might even accuse the designers of intentionally excluding red from the original options, perhaps because of a bias against red or in favor of another color. Shocking! While the question of favorite color is an overly simplified example, the illustration is clear.

Whether it’s adding or removing an answer option, adding or removing a question, or changing an existing question or answer, editing a live survey can result in complicated questions on the reporting end. A biased researcher might leave out any explanation, but the ethical researcher will be forced to include asterisks, footnotes, and narrative justification, at the very least. At worst, a researcher may have to completely toss out some early pre-edit responses as invalid, or scrap the entire project.
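To make the “behind in the ratings” problem concrete, here is a minimal sketch in Python. Every number in it is made up for illustration, and the names `wave_1`, `wave_2`, and `share` are hypothetical. It compares red’s share of all responses with its share among only the participants who were actually offered red.

```python
from collections import Counter

# Hypothetical data: "red" was only offered to the second wave of participants.
wave_1 = ["blue"] * 40 + ["green"] * 35 + ["yellow"] * 25                  # before red was added
wave_2 = ["blue"] * 30 + ["green"] * 25 + ["yellow"] * 15 + ["red"] * 30   # after red was added

def share(responses, option):
    """Fraction of the given responses that picked the option."""
    return Counter(responses)[option] / len(responses)

# Red's share if the two waves are simply pooled together.
naive_red_share = share(wave_1 + wave_2, "red")

# Red's share among only those participants who could have chosen it.
exposed_red_share = share(wave_2, "red")

print(f"Pooled: {naive_red_share:.0%}, among those offered red: {exposed_red_share:.0%}")
```

With these invented numbers, the pooled report puts red at 15%, even though it ties blue at 30% among the people who saw it. Neither figure is “the” right answer, which is exactly why the edit has to be explained in the report.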

Answer 2: It’s sometimes okay

Imagine that you’re conducting a survey and you include a mandatory question about primary work location. Within an hour of launching, you’ve already heard from a dozen people telling you that they won’t be participating because you’ve left Herndon, Virginia, off of the list. What to do?

- If you don’t add Herndon, many people will choose not to participate because they’ve been left out. Worse, some may choose to select a completely different location, just to finish and be done with it. In the latter case, your data validity plummets.

- If you do add Herndon, you’ll probably have to follow up with those initial dozen people and let them know that you’ve made the update and they’re re-invited to respond.

In this case, it probably makes more sense to add the answer option than to leave it off. Here you’re asking a demographic segmenting question rather than an opinion question, so the change is less likely to be seen as an attempt to bias results. Still, there’s nothing quite so disengaging for a participant as realizing that the survey designer totally forgot about them.

Answer 3: Check your tech options

From a research perspective, editing a live survey is fraught with issues. You wouldn’t change protocols halfway through a series of focus groups or one-on-one interviews, and you shouldn’t mess with a validated research instrument halfway through a study.

Technical tools, however, can empower researchers to make the right decision for each project. “Allowing” isn’t the same as “encouraging”, though, so keep in mind that your reports should reflect any changes that you made. Keep careful notes about how and when any changes were made so that any possible impact on results can be assessed in later analysis.
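One lightweight way to keep those notes is a simple change log that can later be matched against response timestamps. The sketch below is only an illustration in Python: `SurveyEdit`, `edits_live_at`, the question label, and all dates are hypothetical and are not features of any survey platform.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)
class SurveyEdit:
    made_at: datetime   # when the edit went live
    question: str       # which question was touched
    description: str    # what changed
    reason: str         # why it changed

def edits_live_at(response_time, edit_log):
    """Return the edits that were already live when a response was submitted."""
    return [edit for edit in edit_log if edit.made_at <= response_time]

# Hypothetical log entry for the missing-location example.
edit_log = [
    SurveyEdit(
        made_at=datetime(2024, 3, 4, 15, 0, tzinfo=timezone.utc),
        question="Primary work location",
        description="Added answer option 'Herndon, Virginia'",
        reason="Option missing at launch; reported by participants",
    ),
]

# Hypothetical response timestamps, one before and one after the edit.
early_response = datetime(2024, 3, 4, 14, 30, tzinfo=timezone.utc)
late_response = datetime(2024, 3, 4, 16, 0, tzinfo=timezone.utc)

print(len(edits_live_at(early_response, edit_log)))  # responded before the edit
print(len(edits_live_at(late_response, edit_log)))   # responded after the edit
```

Tagging each response with the edits that were live when it was submitted makes it straightforward to compare pre-edit and post-edit responses, or to footnote the affected ones, in later analysis.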

How to edit a live survey: Basics

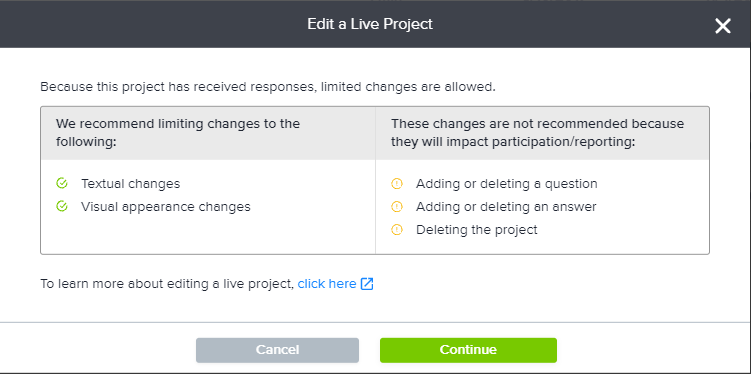

First: Remember that most platforms won’t allow you to change everything you want, and also that your options will vary by package. When you open a live project that has already received responses, you’ll see a warning. You always read these kinds of messages, right?

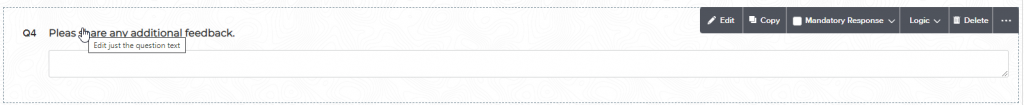

Edit Text: If you just need to edit the text of a question or an answer, hover over the text and then click. If you click Edit in the menu bar that appears to the right, the system will think you’re trying to change the entire question, and you’ll have limited options.

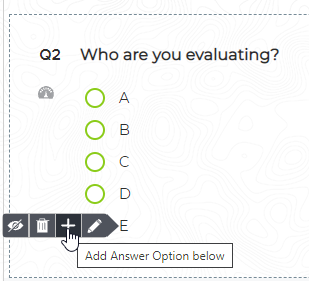

Update Answers: If you need to add or delete an answer option in a live survey, hover to display your options.

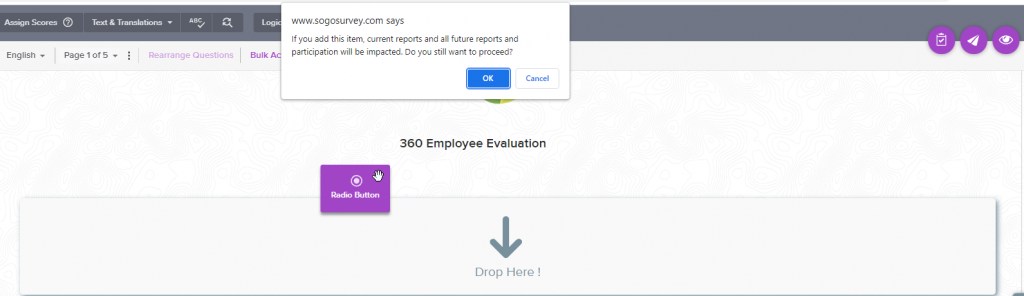

Update Questions: If you’re trying to add or delete a question in a live survey, you’ll see a warning. Heads up: Do you know what you’re doing and why?

How to edit a live survey: Advanced

Despite your best-laid plans and it-seemed-like-enough-at-the-time testing, bigger problems can happen. At some point, you may not be able to salvage your current survey as it is and you may need to opt for an alternative. Either that, or you could just let it go on burning, right? Probably not. Even though you do need to prioritize the value of your results, you also need to prioritize the experience of your participants. If you’ve created a mess for them, the best possible thing you can do is gracefully clear the mess, excuse yourself, and offer an alternative as soon as possible.

The three most common up-in-flames fails that we see are (1) issues with logic, (2) issues with pre-population, and (3) issues with anonymity. Handled well, these are amazing features that have a hugely positive impact on both the participant experience and the quality of data collected. If they’re handled poorly, or forgotten about completely, that’s bad news all around.

If you find that you’ve published a project that wasn’t set up correctly with regard to logic, pre-population, or anonymity, you have two options. You can make a copy, fix the mistakes, and send the new link or invitations to your participants (along with an apology). Or, if you have Switch Invitations in your account, you can magically redirect participants’ invitation links to the fixed copy of the survey — without having to embarrass yourself. Of course, if you’ve already received responses in the original, you may choose to either exclude those responses in reporting or send new invitations to those participants.

Letter to the editors

No matter the changes you make, you’ll have some decisions to sort out in your final reports. Keep track of the updates and be sure that you can justify any changes you’ve made. Ultimately, you want to get good data, but that also means building trust with your participants. If they have concerns that your research is unfair or biased in any way, they’re less likely to respond in the future.

Got a sticky situation? Email us or book a support call from within your account and let’s talk it through.